NeurIPS, ‘22

Does GNN Pre-training Help Molecular Representation?

Summary

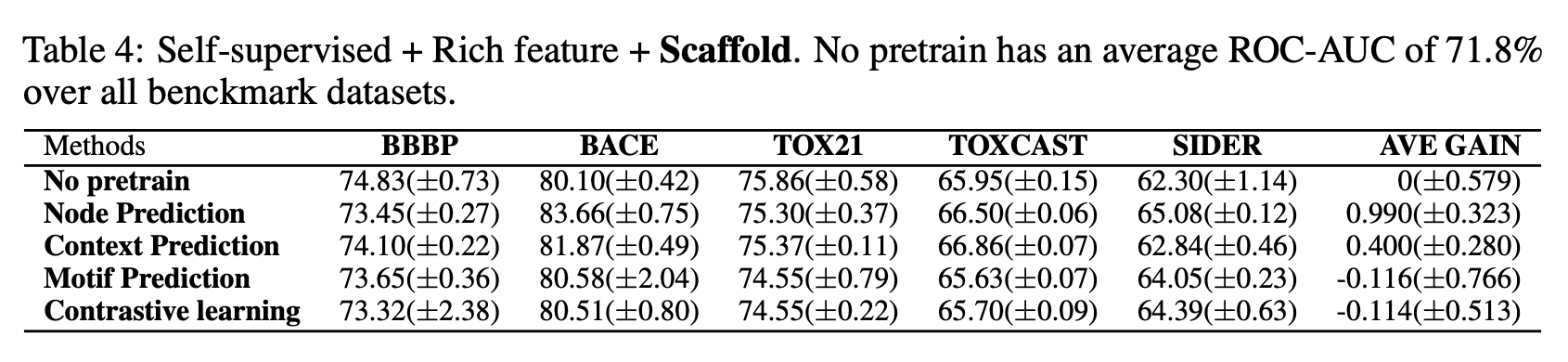

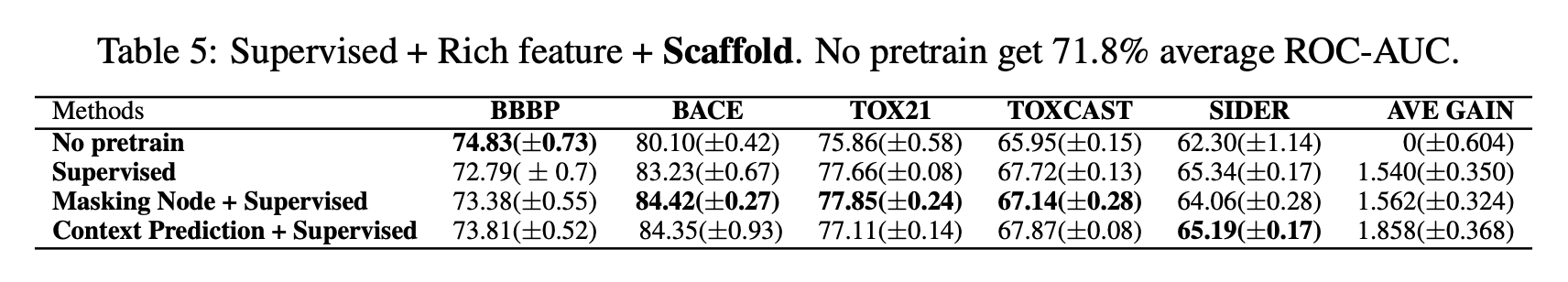

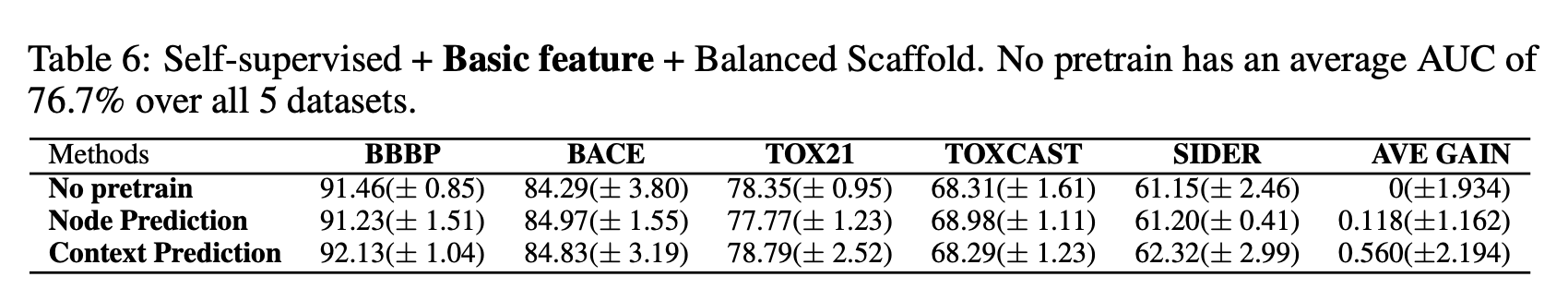

- Self-supervised pre-training alone does not provide statistically significant improvements over non-pre-trained methods on downstream tasks.

- Data splits, hand-crafted rich features, or hyperparameters can bring significant improvements.

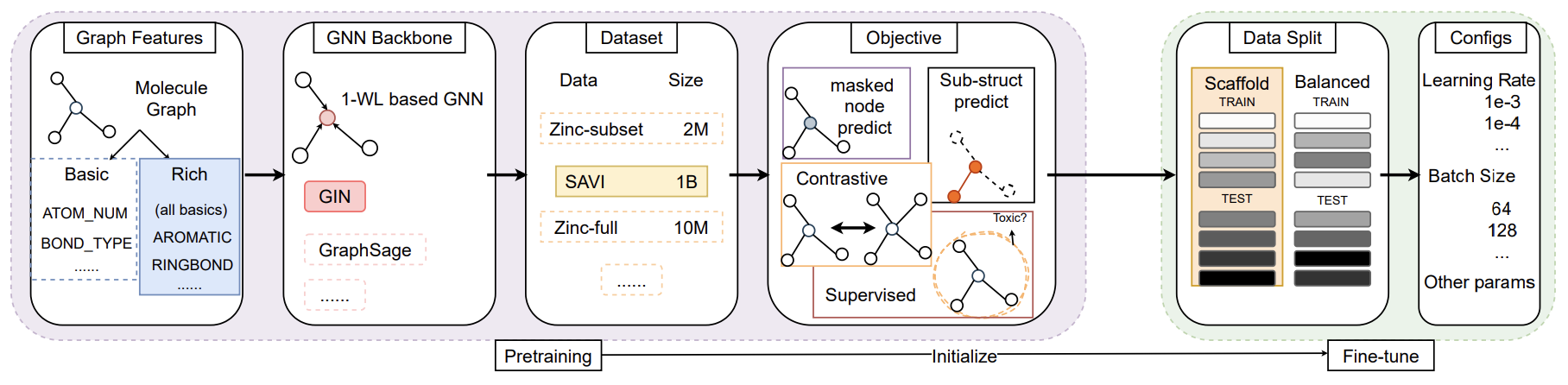

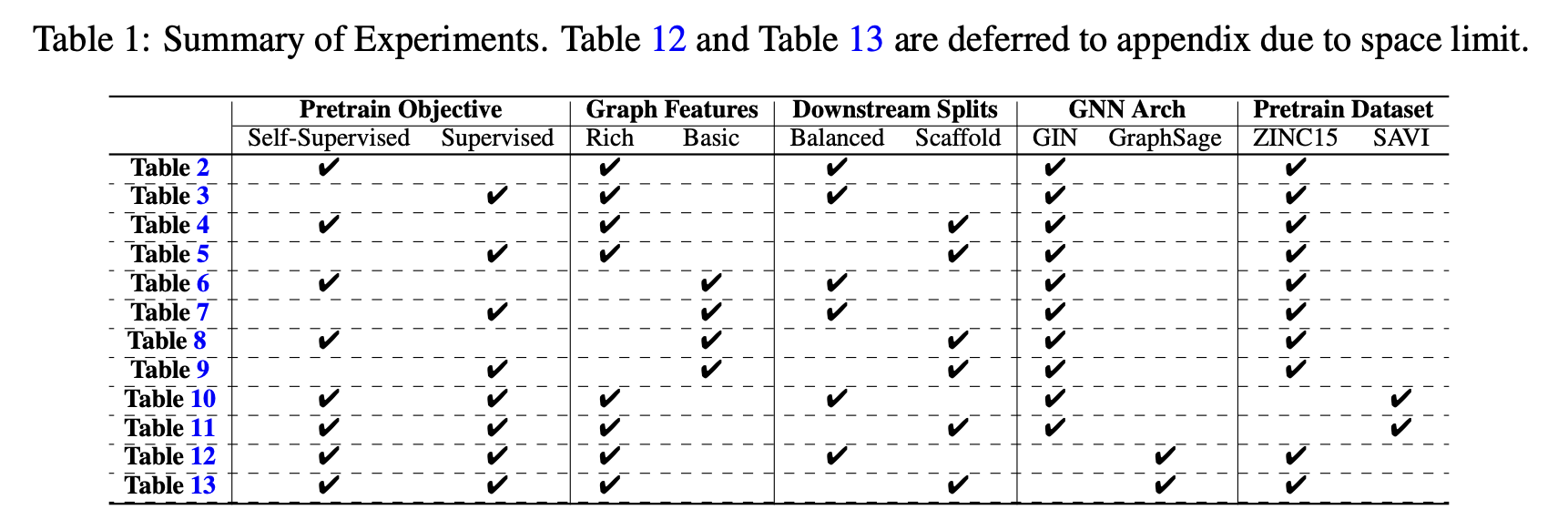

Preliminaries

Pre-train objectives

Pre-train objectives

- Self-supervised

- Node prediction

- Context prediction

- Motif prediction

- Contrastive learning

- Supervised

- Related tasks with label (e.g. ChEMBL dataset)

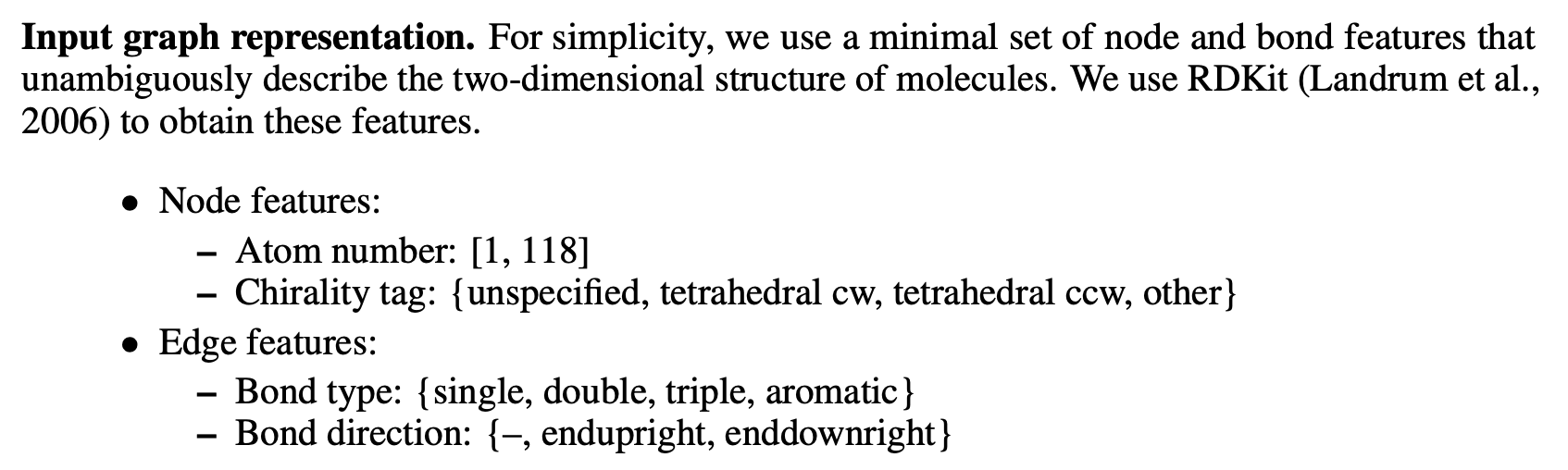

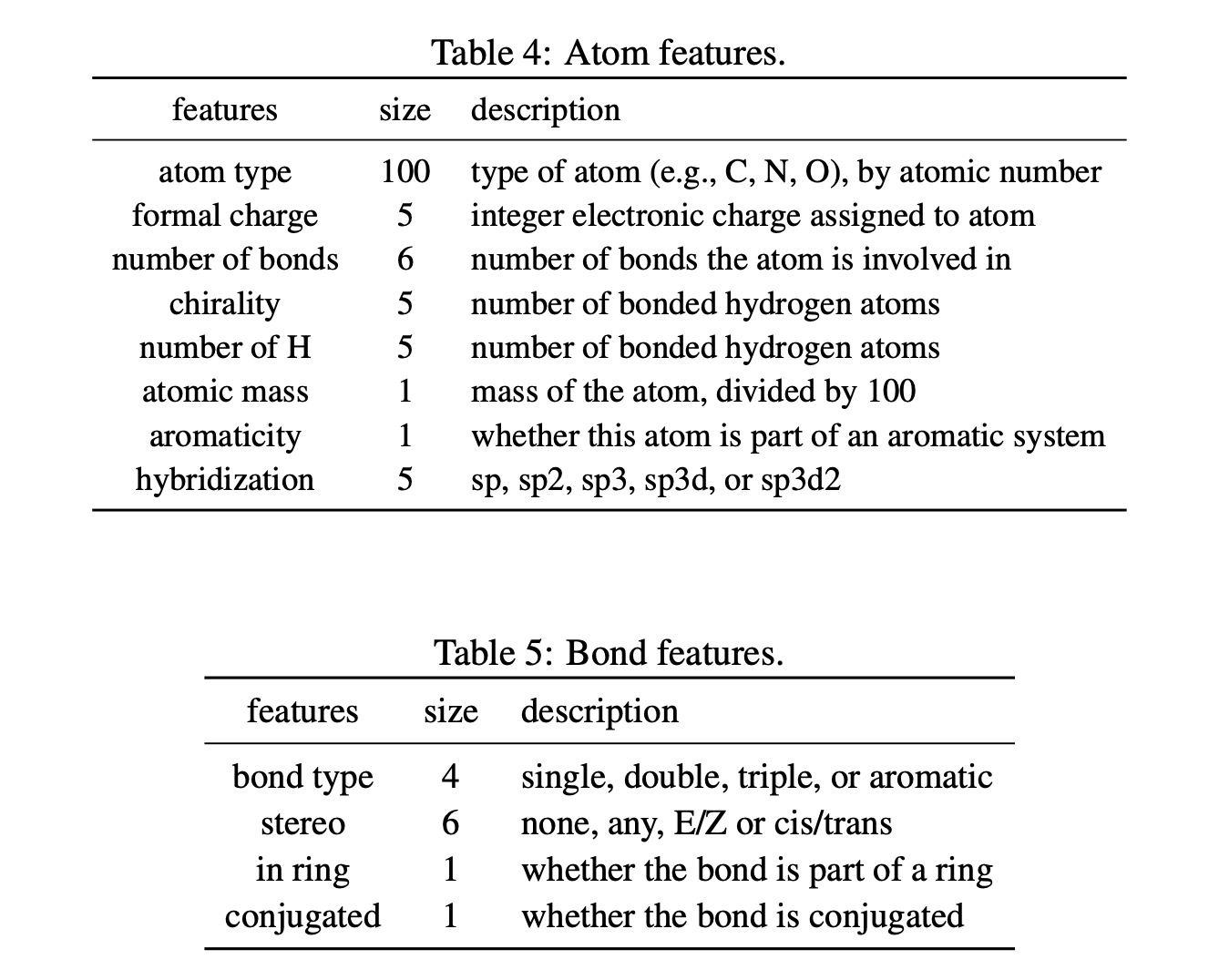

Graph features

-

Basic Feature set used in Hu et al.

-

Rich

Feature set used in Rong et al. This is the superset of basic features.

In downstream tasks, additional 2d normalized

In downstream tasks, additional 2d normalized rdNormalizedDescriptorsare used (not in pre-training).

Downstream splits

-

Scaffold

Sorts the molecule according to the scaffold, then partition the sorted list into train/valid/test splits. → Deterministic

Molecules of each set are most different ones.

-

Balanced scaffold

Introduces the randomness in the sorting and splitting stages of Scaffold split.

GNN architecture

- GIN

- GraphSAGE

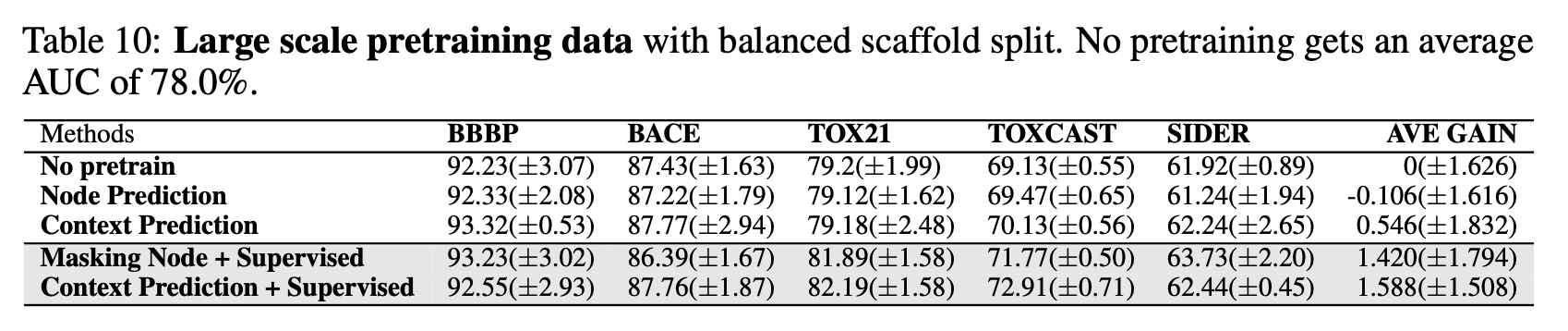

Pre-train dataset

-

ZINC15 (self-supervised)

2 million molecules. Pre-processed following Hu et al.

-

SAVI (self-supervised)

1 billion drug-like molecules synthesized by computer simulated reactions.

-

ChEMBL (supervised)

500k drugable molecules with 1,310 prediction target labels from bio-activity assays.

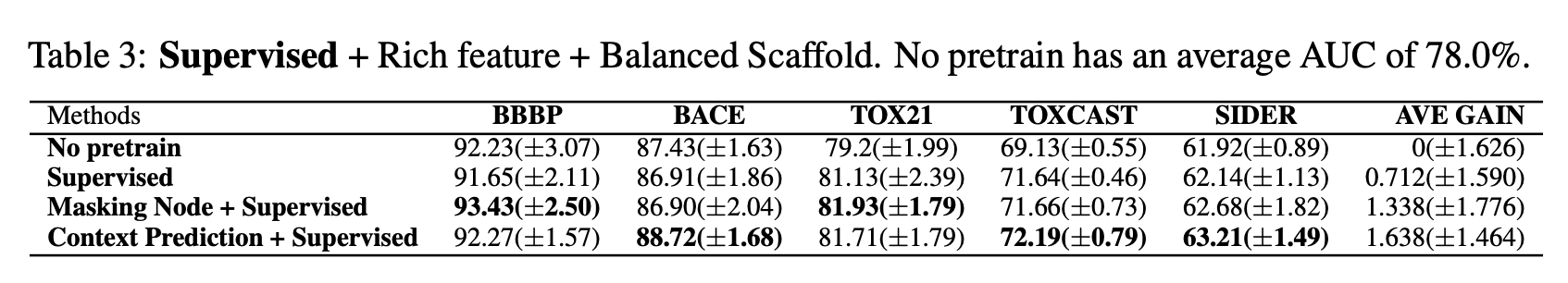

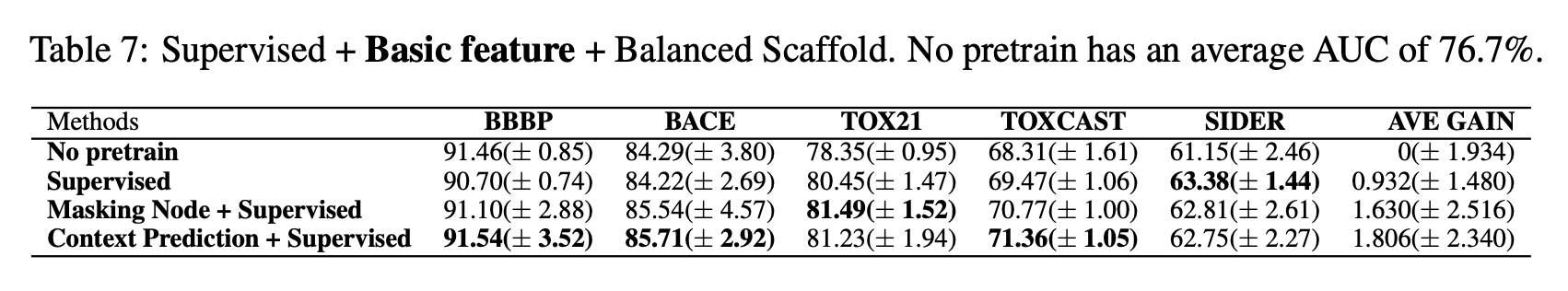

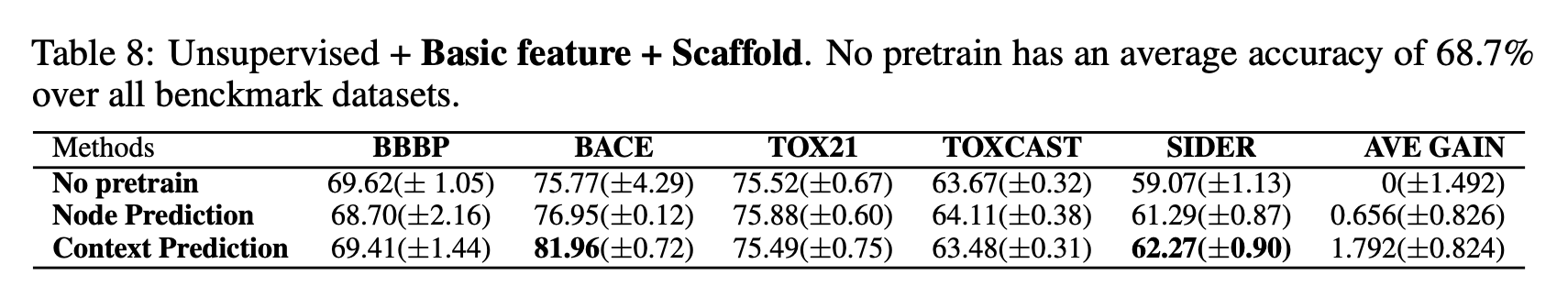

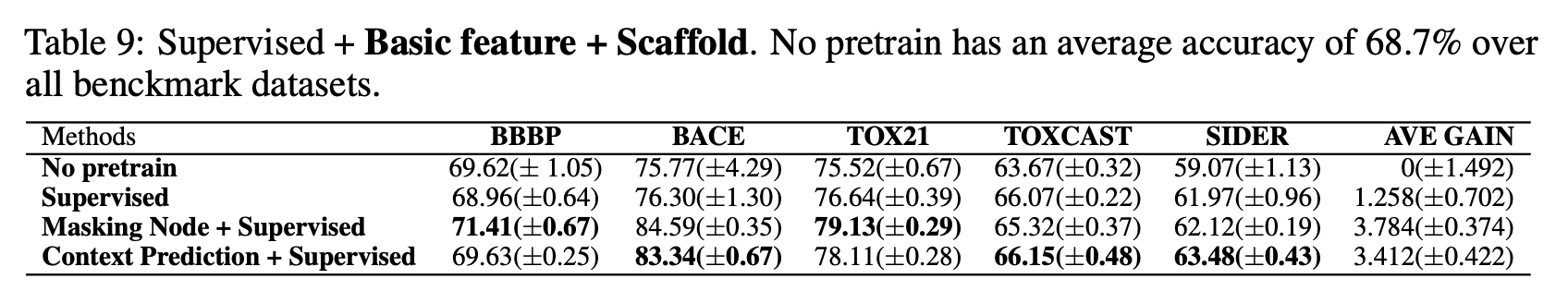

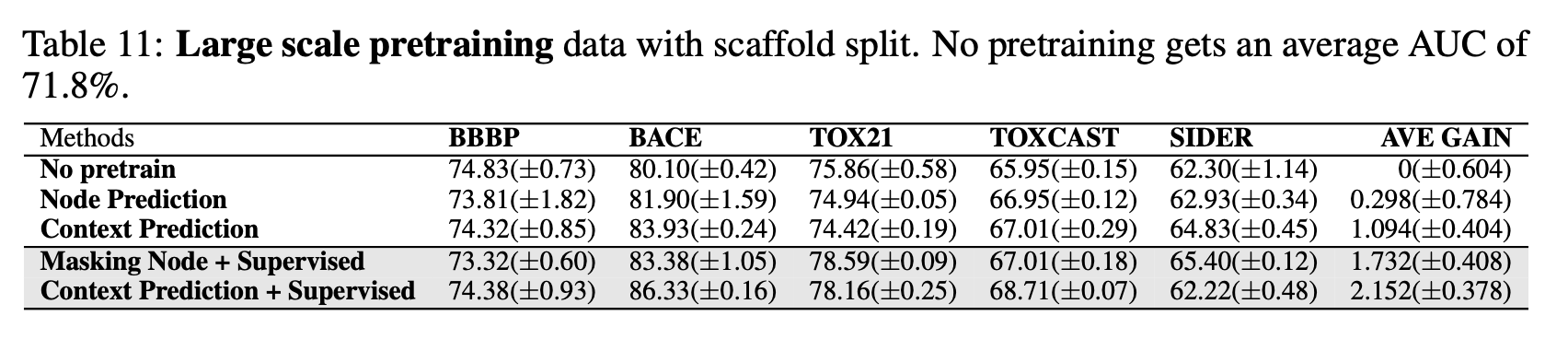

Results

Key Takeaways

When pre-training might help?

- Related supervised pre-training dataset. But not always feasible.

- If the rich features are absent.

- If the downstream split distributions are substantially different.

When the gain dimishes?

- If using rich features.

- If don’t have the highly relevant supervisions.

- If the downstream split is balanced.

- If the self-supervised learning dataset lacks diversity.

Why pre-training may not help in some cases?

Some of the pre-training methods (e.g. node label prediction) might be too easy

→ Transfer less knowledge.

So…

- Use rich features

- Use balanced scaffold

- Use related supervised pre-training dataset

- Use difficult pre-training task (for self-supervised pre-training) and use high-quality negative samples.